2 May 2025

A Breakdown of OpenAI, Anthropic, Google, Grok, Groq, AWS and Cerebras Models Thus Far Available in Powerflow

The University of Nicosia is proud to offer faculty and staff access to some of the most powerful AI models available today through its advanced Powerflow tool.

Below is a concise summary of the five strongest models across OpenAI, Anthropic, Google, Groq, Grok, Amazon Bedrock and Cerebras selected for their intelligence, performance, and versatility. Task requirements should guide your choice: for complex reasoning and research workflows, opt for the most powerful models; for routine daily tasks—such as summarization or email drafting—a faster, more cost-efficient model is often preferable.

Most Powerful

| Model Name | Company | Context Window | Price (Approx., USD per 1M tokens) | Key Strengths |
|---|---|---|---|---|
| GPT-5 Pro | OpenAI | 400K tokens | Input: 15.00; Output: 120.00 | The smartest and most precise model. |
| GPT-5.1 | OpenAI | 400K tokens | Input: 1.25; Output: 10.00 | The best model for coding and agentic tasks across industries. |
| Claude 4.1 Opus | Anthropic | 200K tokens | Input: 15.00; Output: 75.00 | Low-hallucination, tool-centric agents and long-running coding with traceable plans. Strongest Claude on coding and agentic execution; premium pricing. |
| Grok 4 | xAI | 256K tokens | Input: 3.00; Output: 15.00 | Complex reasoning, real-time search, multi-agent workflows, academic-level tasks. Scored highest on ARC-AGI-2, 100% on AIME 2025, topped Humanity’s Last Exam. |
| Gemini 2.5 Pro | Google | 1M tokens | Input: 1.25; Output: 10.00 | Excels in reasoning, coding, and multimodal tasks; supports text, audio, images, video, and code. |

Most Efficient for daily use

| Model Name | Company | Context Window | Price (Approx., USD per 1M tokens) | Key Strengths |
|---|---|---|---|---|
| Gemini 2.5 Flash | Google | 1M tokens | Input: 0.15; Output: 0.60 | Fastest model, with extremely low cost per token, and strong reasoning for its size. |
| Grok 3 Mini Beta | xAI | 1M tokens | Input: 0.30; Output: 0.50 | Optimized for speed and efficiency; suitable for applications requiring quick, logical responses with lower computational costs. |
| Claude 4.5 Haiku | Anthropic | 200K tokens | Input: 1.00; Output: 5.00 | Near-frontier coding and reasoning at a fraction of Sonnet 4.5’s cost; ideal fast daily driver for production agents, chat assistants, customer support, and coding help where speed and price matter. |
| GPT-5 Mini | OpenAI | 400K tokens | Input: 0.25; Output: 4.40 | Smarter daily driver for coding and automation; cost-efficient when outputs are short/medium, with 400K context for larger docs. |
| GPT-5 Nano | OpenAI | 400K tokens | Input: 0.05; Output: 0.40 | Ultra-cheap for classification, routing, and concise summaries at scale. Ideal for high-QPS endpoints and autocomplete. |
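To make the price column concrete, here is a minimal sketch of how a per-request cost could be estimated from the figures in the table above. It assumes, as in the detailed tables later in this article, that prices are quoted in USD per 1M tokens; the `PRICES` dictionary and the `estimate_cost` function are illustrative names, not part of Powerflow.

```python
# Approximate prices in USD per 1M tokens (input, output), copied from the
# "Most Efficient for daily use" table. Assumption: the unit is per 1M tokens.
PRICES = {
    "Gemini 2.5 Flash": (0.15, 0.60),
    "Claude 4.5 Haiku": (1.00, 5.00),
    "GPT-5 Nano": (0.05, 0.40),
}


def estimate_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the approximate USD cost of a single request (hypothetical helper)."""
    input_price, output_price = PRICES[model]
    return (input_tokens * input_price + output_tokens * output_price) / 1_000_000


# Example: a 20,000-token document summarized into roughly 1,000 tokens.
for name in PRICES:
    print(f"{name}: ${estimate_cost(name, 20_000, 1_000):.4f}")
```

Because output tokens are priced several times higher than input tokens for most models, long generated answers tend to dominate the bill even when the prompt is large.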

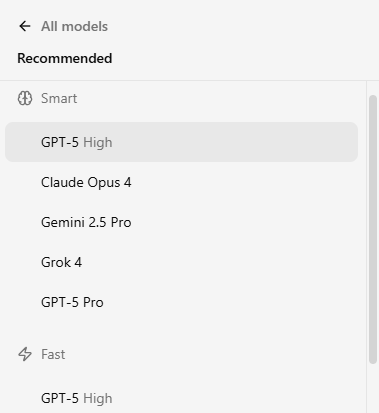

Using the Recommended Models List

To streamline your workflow, Powerflow features a 'Recommended' section at the top of the model selector, divided into:

- Smart: The most powerful models for complex reasoning, research, and advanced coding (GPT-5, Claude Opus 4, Gemini 2.5 Pro, Grok 4)

- Fast: The most efficient models for daily tasks, offering speed and cost-effectiveness (GPT-5 Mini, GPT-5 Nano, Grok 3 Mini Beta)

These recommendations align with the detailed breakdowns below, helping you quickly choose between maximum capability and optimal efficiency.

This section provides a detailed evaluation of the leading AI offerings from OpenAI, Anthropic, Google, and other major providers. Each model is analyzed in terms of its context capacity, cost efficiency, and benchmark performance, helping you select the optimal tool for your specific workflow needs. Continuous updates ensure you’re always working with the latest capabilities and pricing information.

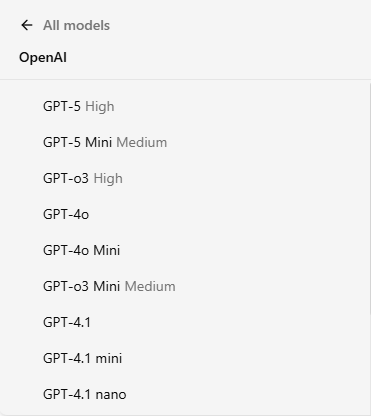

OpenAI

| Model Name | Context Window | Cost (USD per 1M tokens) | Usefulness (Examples) | Benchmark Notes |
|---|---|---|---|---|
| GPT-5 Pro | 400K tokens | Input: 15.00; Output: 120.00 | Highest-fidelity GPT-5 variant optimized for accuracy, complex problem-solving, extended reasoning tasks, advanced coding workflows, multi-step planning, healthcare analysis, financial modeling (SEC filings, three-statement models), legal document review, research synthesis, enterprise workflows requiring reduced hallucinations, and high-stakes tasks demanding maximum precision. | GPT-5 Pro utilizes extended reasoning with higher compute budget per request. On SWE-bench Verified: GPT-5 achieves ~74.9% (Pro variant uses same base model with higher reasoning settings). On SWE-bench Pro: GPT-5 scores 23.3%. Hallucination reduction: ~80% fewer factual errors compared to o3 when using thinking mode. |
| GPT-5.1 | 400K tokens | Input: 1.25; Output: 10.00 | Best for whole-repo coding, agentic workflows (tools/search/code), and enterprise-grade research synthesis; handles very long specs and multi-file edits. | Significant improvements over GPT-5 on math and coding evaluations such as AIME 2025 and Codeforces. |
| GPT-5 | 400K tokens | Input: 1.25; Output: 10.00 | Best for whole-repo coding, agentic workflows (tools/search/code), and enterprise-grade research synthesis; handles very long specs and multi-file edits. | New SOTA across core evals: AIME 2025 94.6% (no tools), SWE-bench Verified 74.9%, Aider Polyglot 88%, strong MMMU 84.2% and HealthBench. |
| o3-pro | 200K tokens | Input: 20.00; Output: 80.00 | Use when you need maximum deliberate reasoning on hard math/science proofs and high-stakes analysis; best “think longer” option if you’re standardized on the o-series. | Designed to tackle tough problems. The o3-pro model uses more compute to think harder and provide consistently better answers. |
| o3 | 128K tokens | Input: 10.00; Output: 40.00 | Best for deep scientific reasoning, advanced coding, and high-end math problem solving. | Powerful reasoning model that pushes the frontier across coding, math, science, and visual perception. |
| o4-Mini | 200K tokens | Input: 1.10; Output: 4.40 | Best for deep scientific reasoning, advanced coding, and high-end math problem solving. | Highest scores from OpenAI on MMLU-Pro, HumanEval, SciCode, and AIME 2024; best OpenAI model currently for general intelligence. |
| GPT-5 Mini | 400K tokens | Input: 0.25; Output: 4.40 | Fast, cheaper GPT-5 for production agents/coding that need accuracy without full GPT-5 cost; good for diffs, batch edits, and long context. | Shares GPT-5’s coding gains; 400K context per docs; positioned by OpenAI as the “faster/cheaper” size (slightly below GPT-5 on SOTA benches). |
| GPT-5.1 Codex | 400K tokens | Input: 1.25; Output: 10.00 | Specialized for code generation, building projects, feature development, debugging, large-scale refactoring, and code review. Optimized for coding agents and produces cleaner, higher-quality code outputs. | Direct successor model of GPT-5 Codex; same purpose, newer brain, better results. |
| GPT-5 Codex | 400K tokens | Input: 1.25; Output: 10.00 | Specialized for code generation, building projects, feature development, debugging, large-scale refactoring, and code review. Optimized for coding agents and produces cleaner, higher-quality code outputs. | GPT-5 variant optimized specifically for coding workflows; excels at internal refactor benchmarks (51.3% vs GPT-5's 33.9%), similar SWE-bench Verified performance to base GPT-5 (~74.9%), exceptional at building projects and feature development with cleaner code outputs. |
| o3-Mini | 200K tokens | Input: 1.10; Output: 4.40 | Best for deep reasoning, advanced problem-solving, complex coding, and AI research. | High intelligence ranking (63); strong in logic-heavy and analytical tasks. |
| GPT-5 Nano | 400K tokens | Input: 0.05; Output: 0.40 | Ultra-cheap/fast for classification, routing, summaries, autocomplete; ideal brain for high-QPS endpoints. | Official compare page lists 400K context; meant for speed/cost, not flagship SOTA. |
| GPT-4.1 | 1M tokens | Input: 2.00; Output: 8.00 | Suitable for coding workflows, general QA bots, and academic tasks with long context needs. | Middle-tier performance in reasoning and coding tasks. |
| GPT-4.1 mini | 1M tokens | Input: 0.40; Output: 1.60 | Budget-friendly for educational tools, basic automation, and medium-complexity writing tasks. | Middle-tier performance in reasoning and coding tasks. Faster than GPT-4.1. |
| GPT-4o | 128K tokens | Input: 3.00; Output: 12.00 | Best for multimodal tasks (text, images, and audio); useful for language translation and chatbots. | Good general-purpose model, but not the strongest in raw intelligence or reasoning, and pricey for its capability tier. |
| GPT-4.1 nano | 1M tokens | Input: 0.10; Output: 0.40 | Ultra-cheap for simple classification, data tagging, and light summarization. | Lower performance across all benchmarks; designed for low-cost, high-speed tasks. |
| GPT-4o Mini | 128K tokens | Input: 0.15; Output: 0.60 | Budget-friendly AI for customer support, simple chatbots, and content generation. | More affordable than GPT-4o but significantly weaker in intelligence and reasoning. |

- Use GPT-5 Pro when you truly need the longest, most careful “think hard” reasoning (it replaces o3-pro for that role).

- Pick GPT-5.1 for cost-efficient reasoning at scale (math/coding/vision) and high-throughput workloads.

- Reach for GPT-4.1 / 4.1 mini when the job demands 1M-token context (giant codebases, litigation files, multi-doc synthesis) at a lower price than GPT-5.

- Choose GPT-4o if your priority is robust multimodal (text-image-audio) and real-time interactivity in the API.

- Use GPT-5 Nano for the cheapest, fastest routing, tagging, and brief summaries (swap out 4.1 nano unless you need its specific behavior).

- Use GPT-5 (default) for end-to-end coding, agentic workflows, and complex research synthesis; it’s OpenAI’s current frontier model with new SOTA results across key benchmarks.
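The guidance above can be read as a simple decision rule. The sketch below is one possible encoding of it, not a Powerflow feature: the function name, its parameters, and the order in which the rules are checked are all assumptions made for illustration, and the context limits are taken from the OpenAI table.

```python
def pick_openai_model(
    context_tokens: int,
    needs_max_reasoning: bool = False,
    needs_realtime_multimodal: bool = False,
    simple_high_volume: bool = False,
) -> str:
    """Hypothetical helper mirroring the bullet list; rule order is an assumption."""
    if context_tokens > 1_000_000:
        raise ValueError("No OpenAI model in the table accepts more than 1M tokens")
    if context_tokens > 400_000:
        # Only the GPT-4.1 family offers a 1M-token window.
        return "GPT-4.1"
    if needs_max_reasoning:
        return "GPT-5 Pro"
    if needs_realtime_multimodal and context_tokens <= 128_000:
        # GPT-4o is listed with a 128K-token window.
        return "GPT-4o"
    if simple_high_volume:
        return "GPT-5 Nano"
    # Default recommendation for coding, agents, and research synthesis.
    return "GPT-5"


print(pick_openai_model(600_000))                      # GPT-4.1
print(pick_openai_model(10_000, simple_high_volume=True))  # GPT-5 Nano
```

Checking the context requirement first reflects the fact that a model that cannot hold the input is not an option, whatever its other strengths.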

Deep Research Models

- GPT-o3 Deep Research – Purpose-built for long-form analytical reasoning, scientific exploration, and cross-document synthesis. Ideal for academic, legal, and technical research where depth and accuracy outweigh speed.

- GPT-o4 Mini Deep Research – A lighter, faster Deep Research model for continuous knowledge discovery, summarization, and daily intelligence gathering. Optimized for cost-efficient retrieval and sustained context handling.

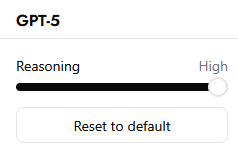

- low: Maximizes speed and conserves tokens, but produces less comprehensive reasoning.

- medium: The default, providing a balance between speed and reasoning accuracy.

- high: Focuses on the most thorough line of reasoning, at the cost of extra tokens and slower responses.

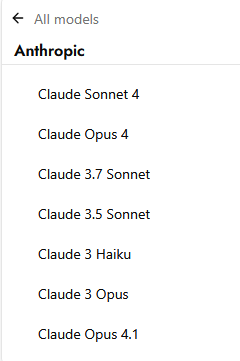

Anthropic

| Model Name | Context Window | Cost (USD per 1M Tokens) | Usefulness (Examples) | Benchmark Notes |
|---|---|---|---|---|
| Claude 4.5 Sonnet | 1M tokens | Input: 3.00; Output: 15.00 | Strong general-purpose model for agentic coding, terminal and tool use, deep math reasoning, and realistic computer-use tasks. | State-of-the-art scores on SWE-bench Verified and OSWorld with perfect AIME 2025 (with tools) and very strong GPQA Diamond performance, putting it among the top reasoning models today. |
| Claude 4.1 Opus | 200K tokens | Input: 15.00; Output: 75.00 | Best for low-hallucination, tool-centric agents and long-running coding workflows: multi-repo refactors, governed JSON/function calls, retrieval-grounded analysis, and day-long autonomous runs with traceable plans. | New high on coding: SWE-bench Verified 74.5%; continues strong agentic execution (Opus 4 posts Terminal-Bench ≈43.2% ± 1.3; 4.1 is the direct upgrade). |
| Claude 4 Opus | 200K tokens | Input: 15.00; Output: 75.00 | Excels at coding, with sustained performance on complex, long-running tasks and agent workflows. Use cases include advanced coding work, autonomous AI agents, agentic search and research, and tasks that require complex problem solving. | Leads prior generation: SWE-bench Verified 72.5%; Terminal-Bench 43.2% ± 1.3. |
| Claude 4.5 Haiku | 200K tokens | Input: 1.00; Output: 5.00 | Fast, cost-efficient model for production agents that need strong coding, tool use, math, and multilingual Q&A without the full Sonnet footprint. | Scores in the mid-70s on SWE-bench Verified and τ²-bench, with high marks on AIME 2025, GPQA Diamond, MMLU, and visual reasoning, landing just below Sonnet 4.5 in overall capability while remaining highly competitive. |
| Claude 4 Sonnet | 200K tokens | Input: 3.00; Output: 15.00 | Claude Sonnet 4 significantly improves on Sonnet 3.7's industry-leading capabilities, excelling in coding with a state-of-the-art 72.7% on SWE-bench. | Faster and cheaper than Opus, and only marginally behind it on benchmarks. |
| Claude 3.7 Sonnet | 200K tokens | Input: 3.00; Output: 15.00 | Top-tier Claude 3-series model; excels in advanced coding, reasoning, and complex tasks. | Outperforms previous 3.5 Sonnet in intelligence/coding benchmarks (per new data). |
| Claude 3.5 Sonnet | 200K tokens | Input: 3.00; Output: 15.00 | Top-performing Claude model; excels in advanced coding, reasoning, and complex tasks. | Formerly best in code generation and MMLU; now slightly behind 3.7. |
| Claude 3 Opus | 200K tokens | Input: 15.00; Output: 75.00 | Deep analytical reasoning, high-level research, advanced problem-solving. | Previously the most capable Claude, now slightly behind 3.5 Sonnet (New). |
| Claude 3 Haiku | 200K tokens | Input: 0.25; Output: 1.25 | Cost-effective, lightweight AI ideal for chatbots and summarization. | Lower power but highly affordable and efficient for simple tasks. |

- Claude 4 Opus: For the absolute best coding, math, and complex reasoning tasks based on new data.

- Claude 4 Sonnet: Excels in advanced coding and workflows.

- Claude 3.5 Haiku: Perfect for quick, cost-conscious tasks like chatbots and summarization.

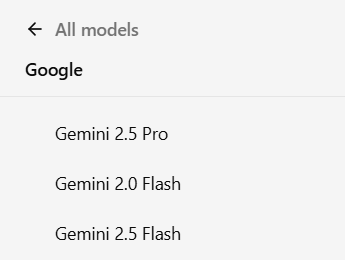

Google

| Model Name | Context Window | Cost (USD per 1M Tokens) | Usefulness (Examples) | Benchmark Notes |
|---|---|---|---|---|
| Gemini 2.5 Pro | 1M tokens | Input: 1.25; Output: 10.00 | Best for complex reasoning, coding, and multimodal tasks (text + image). Strong in creative writing and logic-heavy tasks. | Google's most capable public model. |
| Gemini 2.5 Flash | 1M tokens | Input: 0.15; Output: 0.60 | Fast, cost-efficient chatbots, real-time summarisation, automation, and “thinking-view” agent workflows; light multimodal support. | MMLU 80.9% and Intelligence Index 53; LMArena creative-writing 1431; ~284 tokens/s output speed; 0.28 s TTFT latency. |
| Gemini 2.0 Flash | 1M tokens | Input: 0.10; Output: 0.40 | Optimized for real-time AI interactions, chatbots, and automation. | Lower power but highly efficient for fast-response applications. |

- Gemini 2.5 Pro: best for complex reasoning, creative writing and high-end applications.

- Gemini Flash variants: ideal for real-time AI interactions, fast automation, high-speed summarization, and cost-effective deployments.

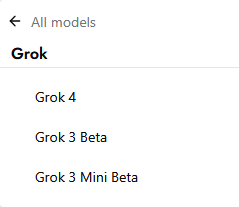

xAI

| Model Name | Context Window | Cost (USD per 1M Tokens) | Usefulness (Examples) | Benchmark Notes |
|---|---|---|---|---|
| Grok 4 | 256K tokens | Input: 3.00; Output: 15.00 | Complex reasoning, real-time search, multi-agent workflows, academic-level tasks. | Scored highest on ARC-AGI-2, 100% on AIME 2025, topped Humanity’s Last Exam; slower output speed (~83.6 tokens/sec). |
| Grok 3 Beta | 1M tokens | Input: 3.00; Output: 15.00 | Advanced reasoning, STEM tasks, real-time research, large document processing. | Achieved 93.3% on AIME 2025 and 84.6% on GPQA; Elo score of 1402 on LMArena; trained with 10x compute over Grok 2; excels in long-context reasoning and complex problem-solving. |
| Grok 3 Mini Beta | 1M tokens | Input: 0.30; Output: 0.50 | Cost-effective reasoning, logic-based tasks, faster response times. | Optimized for speed and efficiency; suitable for applications requiring quick, logical responses with lower computational costs. |

Which Grok model should you use?

- Grok 3 Beta: ideal for deep reasoning, research-heavy workflows, complex STEM tasks, and long document understanding.

- Grok 3 Mini Beta: great for cost-effective automation, quick logic-based tasks, and high-speed chatbot applications.

- Grok 4: for the toughest reasoning, multi-agent orchestration, and real-time search on high-stakes tasks. Choose it when you want peak accuracy and can accept 256k context and slower throughput.

Groq-Based Models

| Model Name | Context Window | Cost (USD per 1M Tokens) | Usefulness (Examples) | Benchmark Notes |
|---|---|---|---|---|
| Llama 3.3 (70B, 128K) | 128K tokens | Input: 0.59; Output: 0.79 | Reading lengthy academic papers, detailed Q&A. | Comparable in context size to OpenAI o1-mini or Gemini Pro (up to 128K). Slightly behind top OpenAI/Anthropic models in raw intelligence. |
| Llama 3.1 (8B) | 8K tokens | Input: 0.05; Output: 0.08 | Lightweight tutoring or instruction-based chat. | Similar to GPT-4o Mini or Claude Haiku but not suitable for large or highly complex tasks. |

- Llama 3.3 (70B, 128K): Best for long documents (128K tokens) if you need a moderate level of coding/logic.
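Whether a document is "long" for a given model depends on its context window. The following sketch shows one rough way to check this before sending a file; it relies on the common rule of thumb of about four characters per token for English text, which is an assumption and not an exact tokenizer count, and the names `CONTEXT_WINDOWS`, `estimate_tokens`, and `models_that_fit` are illustrative only.

```python
# Context windows from the Groq table, rounded to whole thousands of tokens.
CONTEXT_WINDOWS = {
    "Llama 3.3 (70B)": 128_000,
    "Llama 3.1 (8B)": 8_000,
}

# Assumption: roughly 4 characters per token for English prose.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Rough token estimate using ceiling division on the character count."""
    return -(-len(text) // CHARS_PER_TOKEN)


def models_that_fit(text: str, reserved_for_output: int = 1_000) -> list[str]:
    """Return models whose window holds the text plus room for the reply."""
    needed = estimate_tokens(text) + reserved_for_output
    return [name for name, window in CONTEXT_WINDOWS.items() if needed <= window]


# A 40,000-character report is about 10,000 tokens: too large for the 8K model.
print(models_that_fit("x" * 40_000))
```

Reserving part of the window for the reply matters because input and output share the same token budget.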

Bedrock Models

| Model Name | Context Window | Cost (USD per 1M Tokens) | Usefulness (Examples) | Benchmark Notes |
|---|---|---|---|---|
| Nova Pro | 300K tokens | Input: 0.80; Output: 3.20 | Advanced multimodal tasks, including text, image, and video processing; suitable for complex agentic workflows and document analysis. | Achieved competitive performance on key benchmarks, offering a balance between cost and capability. |
| Nova Lite | 300K tokens | Input: 0.06; Output: 0.24 | Real-time interactions, document analysis, and visual question answering; optimized for speed and efficiency. | Demonstrated faster output speeds and lower latency compared to average, with a context window of 300K tokens. |
| Nova Micro | 128K tokens | Input: 0.04; Output: 0.14 | Text-only tasks such as summarization, translation, and interactive chat; excels in low-latency applications. | Offers the lowest latency responses in the Nova family, with a context window of 128K tokens. |
| Titan Text G1 – Lite | 4K tokens | Input: 0.15; Output: 0.20 | Quick writing, summaries, generating simple documents or standard forms. | Similar to GPT-4o Mini or Claude 3.5 Haiku. Note the 4K context is much smaller than many OpenAI/Anthropic/Gemini options (up to 200K). |
| Titan Text G1 – Express | 8K tokens | Input: 0.20; Output: 0.60 | Mid-range tasks: summarizing reports, assisting enterprise communication. | Closer to Gemini Flash in complexity. The 8K context is still smaller than top models’ 128K/200K capacities. |

Which Bedrock model should you use?

- Nova Pro is Amazon’s flagship model, offering advanced multimodal capabilities suitable for complex tasks requiring integration of text, image, and video inputs. GPT-4o demonstrated a slight advantage in accuracy but Nova Pro outperforms GPT-4o in efficiency, operating 97% faster while being 65.26% more cost-effective.

- Nova Lite provides a cost-effective solution for tasks requiring real-time processing and document analysis, with a balance between performance and affordability.

- Nova Micro is optimized for speed and low-latency applications, making it ideal for tasks like summarization and translation where quick responses are essential.

- Titan Text G1 – Lite and Express are designed for simpler tasks with smaller context windows, suitable for generating standard documents and assisting in enterprise communications.

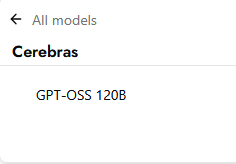

Cerebras Models

| Model Name | Context Window | Cost (USD per 1M Tokens) | Usefulness (Examples) | Benchmark Notes |
|---|---|---|---|---|
| GPT-OSS 120B | 130K tokens | Input: 0.15; Output: 0.60 | High-reasoning model; great for on-prem/VPC deployments, agent/tool-heavy apps (function calling, retrieval-grounded analysis), and production coding workflows when you want near–o4-mini quality without closed-model constraints. | gpt-oss-120b outperforms OpenAI o3-mini and matches or exceeds OpenAI o4-mini on competition coding (Codeforces), general problem solving (MMLU and HLE), and tool calling (TauBench). It also outperforms o4-mini on health-related queries (HealthBench) and competition mathematics (AIME 2024 & 2025). |

Conclusion

Model descriptions compiled by Konstantinos Vassos